Introduction

Businesses are creating more data now than ever before, with the amount of data we need to store doubling roughly every two years. This rapid growth makes it harder to manage and store data effectively without solid archiving strategies in place.

Data archiving is about moving less critical information out of active storage and into a long-term storage system. The main aim here is to keep the data for the long haul, and what’s key is that this data is moved from primary storage to a secondary one, not just copied. This is what sets archiving apart from backups.

In Salesforce, data archiving is a natural part of any business’s growth. All businesses collect data as they operate, and often, this information is more valuable than money or other resources. But keeping all that data in Salesforce can get expensive, so businesses generally face two options: deletion or archiving. Deleting data usually isn’t an option since it’s either crucial for the business or must be kept to meet compliance and regulatory requirements.

That leaves archiving as the best option. The costs of archiving can vary based on the storage type. For instance, archiving data in storage that’s easy to access anytime will cost more than using storage that’s harder to access quickly.

Data Archiving and Data Lifecycle

Data backup and data archiving tend to overlap a lot in the minds of regular users. However, there is also one more term that is very similar in its nature – the data lifecycle.

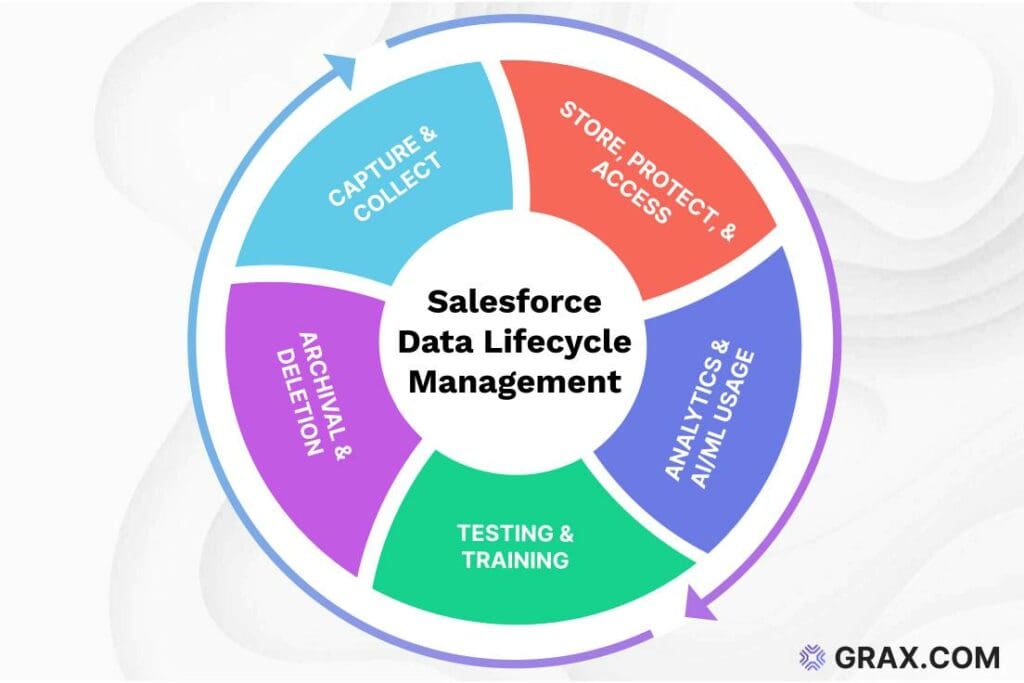

Data lifecycle management is a process of monitoring and improving the so-called “data flow” throughout its entire lifecycle, from initial data creation to its fall into obscurity and subsequent deletion. The average data lifecycle includes multiple separate phases, each with its own unique characteristics.

Data archiving is one of these phases. It covers a very specific moment that indicates a situation when information is not relevant or necessary enough to warrant it being kept in the primary storage. Thus, the process of transferring that information to a dedicated long-term storage is initiated.

That is precisely what data archiving is – information transfer to improve performance and convenience without losing access to the information in question. It is a much more specific term than data lifecycle, and the overall scope is the most significant difference between the two.

Data Archiving Key Benefits in Salesforce

Archiving as a process can be extremely beneficial to most businesses in multiple areas. Effective archiving environment can assist with data security efforts while reducing overall storage costs, boosting performance, and even assisting with compliance or legal matters. Data archiving in Salesforce is primarily used as an alternative to irreversible data deletion, which offers a number of substantial advantages:

- Archived data protection

Data archival can also play a part in a company’s disaster recovery strategy since many archival storage units are often placed separately from the primary storage location, both physically and logically. Reducing the total volume of information in production leads to lower overall damage to the organization from a data breach event, which puts even more emphasis on preparing correct data archival strategies.

Another aspect of data security as a topic that archiving can assist with lies in the ability to reduce the risk exposure during any event of data corruption or loss. Proper archival efforts can provide organized access to archived information in an off-site location, supporting disaster recovery efforts in case the primary data storage becomes affected by some sort of a disaster.

- Storage cost reduction

Being able to archive information that is not immediately necessary for the day-to-day operations of a business reduces the strain on the primary storage hardware while also drastically reducing the data storage fees from Salesforce (which tend to get quite high as time goes on, with each subsequent storage expansion growing in price at a startling pace).

- Overall performance boost

Not working at a limit of the storage capacity also tends to improve the performance of practically any system, including Salesforce. Additionally, the non-linear approach to the same performance concern is to recognize how it is a lot easier to locate necessary information in the company’s filesystem when there is less non-essential information being stored alongside sensitive files.

- Regulatory compliance

Industry regulations and compliance frameworks have become much more common in recent years. It is hard to find a company that is not aware of GDPR, PCI DSS, or any of several dozen frameworks being applied to specific industries or geographic locations.

It is important to understand that breaching compliance requirements is often not just a reputational issue. It is also both a massive legal risk and a high potential for a tremendous monetary fine. As such, being able to archive information in accordance with all of the necessary regulations can drastically remove the risk of any of these events happening.

When is it necessary to start creating the data archiving routine in Salesforce?

In a perfect scenario, the data archiving strategy should already be in place for any organization that has been using Salesforce for a substantial period of time. Interestingly enough, the aforementioned advantages of data archiving can also serve as the primary reasons for when it is time to start archiving information:

- Setting up a disaster recovery framework in the context of Salesforce must include data archival in some way, shape, or form. It is a vital part of a company’s data protection system, which is now at its most important, with the number of data breaches growing all over the world at a fast pace.

- Dealing with a hefty price tag for data storage in Salesforce is another dead giveaway for the data archiving strategy to be implemented. The storage fees of Salesforce might not seem all that high at first, but the total cost tends to get extremely high as time goes on and a business grows in size.

- Low performance and problematic data locations are both viable signs that data archiving should be used since both of these reasons are often connected with the abundance of data stored in a single environment (not all of the data stored in the primary storage is required daily, in most cases).

- Regulatory and legal concerns when it comes to data retention should also be a relatively clear sign of a company being required to implement all of the necessary information storage requirements, which often includes data archiving in long-term storage for a specific time period.

Backup vs Archiving in Salesforce

Backup process is another notable term that is often confused with archival, both inside and outside of the Salesforce environment. The most important distinction between them is their purpose, and it is not particularly difficult to understand:

- Backup processes create a copy of the information to be stored in another location without affecting the original.

- Archival processes move the information to be stored in a separate location, meaning that the information is no longer stored in its original environment.

Both processes are important for any environment that works with data regularly. There are at least three other points of distinction that we would like to go through here:

- Storage. Backups are kept in storage environments that are optimized to be retrieved as quickly as possible at a moment’s notice. Archiving, on the other hand, often uses cost-effective storage for long-term preservation, which does not offer the most compelling performance in many cases.

- Frequency. Backups are performed relatively frequently, with daily backups being commonplace to ensure that the information stored in backed-up environments is as up-to-date as possible (unlike most solutions, GRAX offers Continuous backups). Archiving, on the other hand, differs significantly depending on retention requirements and data lifecycle policies, although they are often much less frequent than backups.

- Access. Backups are kept in easily accessible formats with the purpose of being restored as quickly as possible when necessary. Archived data does not have to be addressed that frequently, and thus, it is often stored in an encrypted or even compressed format that does not allow for quick access at any point in time.

Say Goodbye to Salesforce Storage Overload

Discover how easy it can be to automate your archiving process, retain critical information, and stay compliant.

Best Practices for Data Archiving in Salesforce

The list below includes some of the most common pieces of advice that could be given to most data archiving environments. It should be noted that this list is far from conclusive, and there are many other best practices that would only be suitable and relevant to a very specific user group.

Process automation

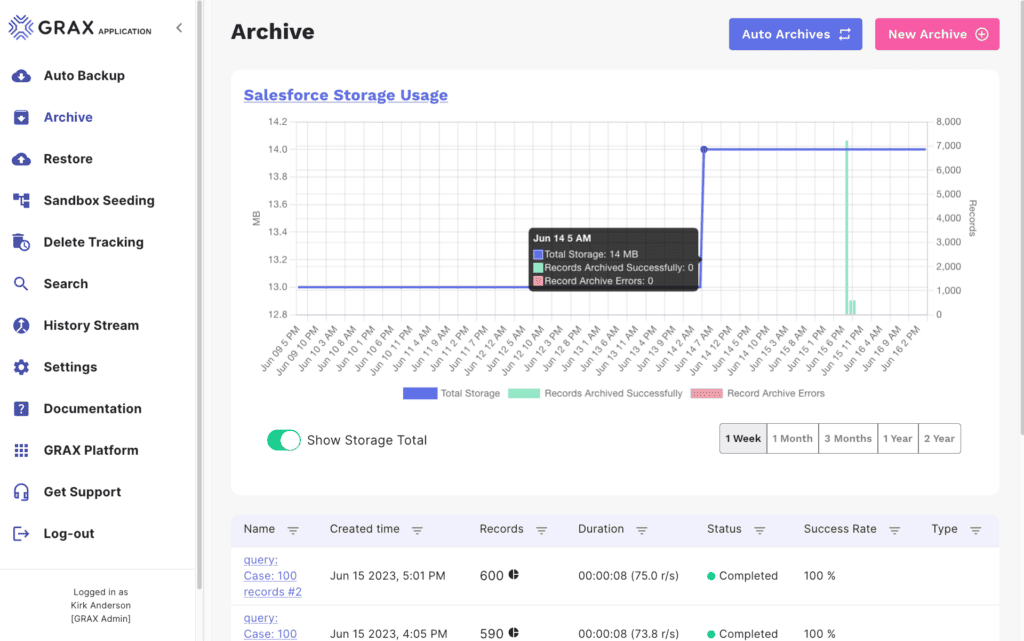

Data archiving does not have to be a completely manual process. It is possible for a custom data retention policy to define specific data categories and groups that could be transferred to the archival storage when necessary. The trigger event for the archival event could also differ significantly, from the total percentage of the Salesforce storage capacity being reached to something as basic as regular time intervals, such as a day, a week, a month, etc. Automation also tends to reduce the cost of archiving while also freeing up labor for other, more important tasks.

Data utility

Data archiving processes, when set up correctly, can be a significant advantage to a company’s data utility efforts. Setting up a data archiving system that can transfer less mission-critical information to a different storage environment offers plenty of advantages, from lower costs to higher efficiency and even better decision-making due to the removal of outdated insights and the ability to keep only the most relevant data in the rotation.

Additionally, archiving strategies can also be used as the means of gaining a better understanding of how the Salesforce data operates in your org by analyzing data usage trends and other similar metrics. There is no way to see how storage has changed over time in native Salesforce environment, making a third-party archiving solution with vast statistical capabilities the only way to acquire such information.

Data security

The very existence of a properly configured data archival policy in Salesforce is a substantial advantage to the company’s information security situation. However, the information in the archives should also be protected to a certain degree – with basic access controls and data encryption being some of the most rudimentary requirements that should be implemented in any such system.

The fact that the archived information in a client’s system is not considered essential for a company’s functioning does not mean that it does not hold value to malicious agents such as hackers. This is also combined with the fact that a lot of the archival data is often subject to one or several regulatory frameworks, leading to plenty of issues we have mentioned before – reputational damages, fines, and legal actions.

Off-platform data storage

Despite the fact that Salesforce has a native archiving offering that removes information from search results, it does not remove it from the overall Salesforce storage. The reason why the topic of storage cost reduction is important here is because of how expensive Salesforce’s internal storage tends to be.

On average, Salesforce’s basic data storage cost is $250 per month per 1 Gigabyte, with the ability to purchase additional storage for the same price. The file storage, on the other hand, is priced at $5 per Gigabyte per month and offers 10 Gigabytes of storage to any paid user from the get-go. [1]

In this context it should be easy to see how the total cost of internal storage in Salesforce can become an unbearable burden for many companies. As such, there is always an option to use one of many public cloud storage environments as backup or archival storage environment with the following price tags:

- Azure from Microsoft – $0.021 per one Gigabyte of data or file storage. [2]

- AWS from Amazon – $0.023 per one Gigabyte of data or file storage. [3]

- Cloud Platform from Google – $0.020 per one Gigabyte of data or file storage. [4]

Archiving strategy

Defining your goals for data archival is a rather important part of the entire process. Any of the previously mentioned objectives could be used for this reasoning, be it regulatory compliance, high storage costs, low system performance, and so on.

These objectives can help with establishing what specifically needs to be archived and when – including factors such as usage frequency, data age, relevance for business processes, and more. Also, make sure that all of the stakeholders have a clear understanding of both the benefits and the objectives of this archival strategy since support and understanding are crucial for the archiving strategy to be implemented properly.

Data relationships

The very nature of data archiving implies that there still should be integrity between archived information and the data left in the primary storage. The so-called “parent-child” relationship between information elements is extremely important in environments such as Salesforce due to its common interactions between data and metadata.

Making sure that data connections are safe is mandatory to maintain the context of existing data without breaking its integrity as a result of archiving. The same logic applies to the restoration processes, as well – make sure to define data restoration strategies that would be able to restore information without breaking the pre-existing connections between information in different storage mediums.

Failure to preserve this connection may result in data being retrieved in an incomplete stage, along with a number of other data integrity implications.

Frequent testing

Archiving processes are not one-and-done efforts; they are ongoing processes that need to be monitored and tested on a regular basis to ensure their proper functioning. Reviewing audit logs for data archiving on a regular basis drastically improves the chances of spotting and solving various issues or anomalies before they can produce any damage to the storage or infrastructure.

Keeping track of key performance metrics such as storage utilization and system response times is another good idea, allowing for a somewhat rudimentary efficiency assessment before adjusting existing archiving strategies when necessary.

Certified solutions

While Salesforce does offer a number of built-in solutions for data archiving, all of them have substantial disadvantages that make them difficult to use in a large-scale enterprise environment. As such, it is highly recommended to use third-party archiving solutions in order to receive seamless integration and incredible performance.

Salesforce AppExchange offers a number of data archiving tools to choose from, with each tool being vetted by Salesforce to ensure reliability and effectiveness in a unique Salesforce storage framework. Reliable vendor support is also an important factor to look for here since they are responsible for regular updates, customer support, and more.

AI-Powered Archiving Intelligence

While the aforementioned best practices do form the foundation of effective archiving processes, the role of emerging technologies is also not to be underestimated. The integration of technologies such as Artificial Intelligence into Salesforce archiving workflows is a dramatic leap forward in terms of how companies can control and improve their data lifecycle.

Modern archiving systems with AI-powered capabilities are capable of transforming existing rule-based archiving workflows into adaptive and flexible processes that can be continuously optimized without disturbing any of the relationships or accessibility options.

Archival Candidate Identification Using Artificial Intelligence

AI algorithms have the potential to analyze a variety of data points at once, identifying optimal archival candidates in a short time frame. These systems tend to be much more granular and customizable when compared with simple usage-based or age-based filtering rules since they can work with the following patterns in mind:

- Retention policies or compliance requirements;

- Complex dependencies and data relationships;

- Cyclical business processes and seasonal usage patterns;

- Access frequency across different user roles or departments;

- Business value indicators from historical data utilization.

AI systems also tend to have the ability to learn from organizational behavior as time goes on, offering better predictive accuracy in terms of archival targets. Such a dynamic approach makes sure that actual business needs and archiving decisions remain aligned at all times.

Storage Optimization with Predictive Analytics

It is not uncommon for modern archiving solutions to provide storage management optimizations using predictive analytics, including the following options:

- Cost implication analysis for different storage strategies.

- Future storage needs forecast using historical patterns of data growth.

- Storage tier optimization with the help of predicted access patterns.

- Optimal timing identification for archival tasks in order to lessen the overall system impact, and so on.

Predictive capabilities like these make it possible for organizations to switch from reactive to proactive storage management. Such a drastic change in approaching storage optimization has the potential of saving substantial costs without reducing overall system performance.

Machine Learning and Context Preservation

The ability to maintain the context and relationships for archived Salesforce data is one of the most substantial challenges in the data archiving field. Machine Learning algorithms can be a potential solution to this issue using the following capabilities:

- Metadata and context maintenance even when the primary information is archived.

- Complex data relationship mapping for objects and records.

- Automatic data tagging and categorization.

- Ability to look for hidden dependencies that the traditional rule-based algorithm might have missed.

The information archived with such an approach can maintain its value and context in business environments, no matter if it is accessed for a single purpose or restored in its entirety.

Continuous Optimization and Improvement

AI-powered archiving processes have practically no limitations in terms of their growth over time due to a large number of processes that can analyze and optimize the entire environment. This kind of categorization includes feedback loops from user interactions with archived data, adaptation to changing compliance requirements, archival and retrieval pattern analysis, performance optimization based on system impact analyses, etc.

The continuous learning process exists to make sure that the efficiency of the archiving strategy grows over time without moving away from organizational needs. AI-powered solutions are the cutting edge of archiving technology, but they still require a solid understanding of different fundamental tools and methods that exist in the Salesforce ecosystem. However, before we move on to exploring what Salesforce can offer in its native archival toolset, it would also be valuable to talk about how data archival processes are influenced by the topic of cross-platform synchronization.

Cross-Platform Data Synchronization Challenges

As business environments grow in complexity, many companies begin to work with several Salesforce instances at once, be it for different regions, divisions, or acquired companies. A multi-org reality like that has its fair share of unique challenges in data archiving, necessitating a complex synchronization strategy to maintain consistency of information without losing on its accessibility.

The Multi-Org Archiving Architecture

Archival management across more than one Salesforce organization would be extremely difficult without careful planning and a solid architecture that can address a number of important considerations:

- Data management mastery with authoritative sources and consistent data identifiers.

- Optimal synchronization frequency that provides perfect timing for cross-platform status updates to find the right balance between data relevance and system load.

- Cross-org access control environment with a selection of security measures that can respect organizational boundaries and enable necessary data sharing.

- A choice between distributed and centralized archival storage, with consolidation and separate storage rules having their own unique advantages and shortcomings.

Hybrid Cloud Archiving Environments and their Challenges

Modern-day enterprises rely on hybrid environments in most cases, combining a selection of cloud storage platforms with on-premises environments for a variety of specific use cases. Such complexity also brings in a number of challenges that everyone would have to keep in mind:

- The performance impact of data synchronization across several physical and virtual locations.

- A delicate balance between storage costs in different platforms and necessary performance parameters.

- Archive management in different geographic regions with local data sovereignty laws in mind.

- Flexible backup and failover mechanisms for all platforms involved.

Some of the most complex scenarios for such environments would be different merger and acquisition situations where a global corporation acquires regional businesses, facing the challenges of merging distinct environments into a single cohesive framework that can operate with sufficient power and flexibility.

Best Practices for Data Synchronization Across Platforms

Successful cross-platform archive synchronization can be achieved with the help of certain best practices that have emerged with the rise of multi-platform archival situations. The most noteworthy examples of such challenges are unique global identifiers that work across all platforms and archives, and a variety of environment-wide rules for data conflict resolution.

Maintaining comprehensive logs of data movements between platforms is also highly recommended, and the same could be said for various monitoring systems that track the performance and synchronization metrics for the entire environment.

The success of cross-platform information synchronization in relation to archiving relies a lot on detailed planning, continuous monitoring, and a robust infrastructure. The ability to maintain consistent and accessible archives across several platforms remain extremely important to business operations as companies continue to grow and evolve. Now that we are done covering the topic of multi-platform archiving processes, we can begin the showcase of archiving tools, starting with Salesforce’s native options.

Existing Archival Methods in Salesforce

Generally speaking, none of the built-in data storage methods in Salesforce can be considered archiving. The closest example is Salesforce Big Objects, and even that still has plenty of differences with how data archival should be performed. There are also some nuances to the platform as a whole that are worth explaining here, and we will also discuss the reasons why these built-in measures are not recommended for archiving in most cases.

First of all, there is a certain amount of data (and file) storage that Salesforce provides to its users for free. The exact volume depends on the total number of user licenses in one environment, as well as the “edition” of the software purchased. Salesforce separates its storage capacity into two large groups:

- File storage is used for documents, files, attachments, etc.

- Data storage is used for contacts, custom objects, accounts, etc.

It is technically viable for some companies to stay within the “free” limitations of Salesforce storage. However, it becomes harder and harder as time goes on, and the volume of data the business works in is also expanding.

There is also a somewhat helpful feature called Field History Tracking, which works as the local versioning system, making it possible to track changes to both standard and custom object records. It can be enabled manually, but it can also consume a lot of storage capacity if not configured correctly.

Salesforce Big Objects and Other Archiving Strategies

Salesforce Big Objects is a system that allows Salesforce users to store tremendously large data volumes within the platform through a process that closely resembles data archiving. The official goal of Big Objects is to be used for information that has to be retained for either regulatory compliance or historical reference.

The size of Big Objects means that querying them is only possible using Async SOQL, which also makes querying these objects possible in the background without sacrificing system performance. It is also much more difficult to retrieve this data compared to standard object retrieval due to the requirement of using asynchronous processing to do so.

Alternatively, companies can use simple data extraction processes as the means of archiving the most valuable Salesforce information. Salesforce allows for data extraction to be performed in the form of .CSV files using either built-in capabilities or third-party tools. Such information can be stored as regular files in an external system reducing the load on internal infrastructure as a result. However, the complexity and manual nature of such a process makes it unlikely to be a primary archiving option at scale.

External storage is a common factor for these strategies, such as the usage of custom external objects, which makes it possible to store information outside of Salesforce’s internal storage environment but requires a lot of customization to set up and use. Since each external object in Salesforce would have to have an external data source associated with it, specifying how they can access the external environment, there are plenty of concerns in regards to data security and general effectiveness when it comes to this method.

Open source cloud platforms can also technically be used as target locations for archival processes. For example, Heroku-based services can archive historical data from the Salesforce environment using a bi-directional synchronization and a Heroku Connect add-on that can manage Salesforce’s accounts, contacts, and other custom objects with the Postgres database. It would be fair to mention that Heroku is capable of much more than just archiving – it can also offer business intelligence, CRM multi-organization consolidation, and a selection of other features.

Limitations of Built-In Archiving Capabilities of Salesforce

Unfortunately, the fact that Big Objects is provided directly by Salesforce does not mean that it is a perfect tool for archival purposes. On the contrary, there are plenty of disadvantages that users would encounter when trying to perform data archival tasks purely with the built-in methods, including:

- Complex setup processes

- Problematic ongoing management

- Limited feature set

- Difficult integration with external systems

- Challenging data access, and more.

The sheer number of limitations makes it easy to see why most Salesforce users prefer to work with third-party archiving tools instead of trying to operate Big Objects with increasingly complex queries and without most of the Quality-of-Life changes that third-party software has.

Benefits of a Third-Party Salesforce Archiving Solution

As an example, GRAX can resolve all of the issues and shortcomings mentioned above, including:

- Setup process simplification with a user-friendly interface makes it a lot easier to configure archival software, especially with the help of intuitive workflows and guided configurations.

- Ongoing management improvements due to GRAX’s ability to automate plenty of menial and repetitive tasks. Some of the more common examples of features here are scheduled archiving, data retention policies, and ongoing monitoring for data archival processes.

- Rich feature set makes it possible for GRAX to provide custom retention policies, historical tracking, advanced search and retrieval, versioning, etc.

- Complete support for Big Objects data, making it possible to migrate all of the data from Big Objects without losing access to it.

- Simplified integration with various external systems and platforms greatly expands the possibilities for data archival while improving upon the overall data utility, since the integration can be done with warehouses, data lakes, and a number of other SaaS applications.

- Easy access to archived information is guaranteed in GRAX with intuitive data retrieval capabilities and advanced search capabilities, making it extremely easy to locate and restore necessary information without using complex queries like in Big Objects.

Other capabilities of third-party software like GRAX that may be useful in different archival situations are accessible monitoring and reporting, storage scalability, multiple information security measures, comprehensive audit trails, and many others.

Implementing Archival Strategy in Salesforce

Creating a viable archival strategy in a Salesforce environment can be rather challenging. Luckily, we can offer a relatively small implementation guide on this exact topic. There are going to be five major steps in total:

- Data assessment

Before initiating the archival process, it is necessary to understand what data types and volumes can be archived without affecting regular operations. Business relevance and access frequency are usually the most common factors that determine what data can be archived and what has to remain in the primary storage.

This is also where all of the internal retention policies and regulatory frameworks have to be reviewed to ensure that all of them are taken into account.

- Picking the right tool

The usage of built-in tools and features such as Field History Tracking and Big Objects is only viable for businesses as short-term solutions for the reasons we have mentioned above. The long-term solution would have to be a third-party tool from the Salesforce AppExchange – that way, the archival solution would be guaranteed to be tested and compatible with the Salesforce environment from the beginning.

Two important factors are worth considering here: the scalability and the price of the solution. The former factor is important for companies expecting substantial growth in the near future, while the latter factor depends entirely on the size of the budget that was assigned to the data archival solution.

- Archiving ruleset

It is necessary to make the archival rules as detailed and thorough as possible to make sure that there are no errors with the archival process. The most common recommendations are to use retention policies and job scheduling to simplify the entire process as soon as the basic requirements in terms of the data are set.

There are three large groups of criteria that can be used to define what data has to be archived:

- Business-specific criteria, be it customer lifecycle stages, project completion, etc.

- Usage-based criteria to filter out information that has not been accessed for a specific time period.

- Age-based rule set covers all of the information that has been created a specific period of time ago (such as 1 year, 2 years, etc.).

Making sure that archiving rules preserve the relationships between active and archived information is also a good idea, especially when it comes to the data-metadata communication inside Salesforce.

- Team training

Every single member of the team needs to be aware of how important data archiving is. When done right, all team members should be aware of how to apply the archiving rules when necessary. Creating comprehensive documentation and other resources on the subject is also a great idea in this context, as well as performing regular training sessions in order to solve any of the potential questions that might have arisen.

- Ongoing optimization

Data archiving is not a one-and-done process. It needs to be audited and monitored regularly to ensure that all of the policies and rule sets are applied correctly. Monitoring system performance and archival process performance are both viable sources of information at this stage, offering plenty of information that may be required to optimize the process even further.

Industry-Specific Archiving Patterns

Even though a lot of core archiving principles are consistent across different sectors, there are also plenty of industries that have their own unique challenges and requirements in the field of data archiving. It is extremely important to have a deep understanding of these industry-specific patterns in order to improve the efficiency of archiving processes that can balance operational efficiency and compliance with ease.

Archiving in the Healthcare Industry

Healthcare industry deals with extremely sensitive patient data on a regular basis, which is also subject to regulatory requirements such as HIPAA. There are certain data retention requirements that must be followed here, including:

- 6 years of patient records depending on the state.

- 5-10 years for diagnostic images.

- Pediatric records have to be stored until the patient reaches age of majority plus statute of limitations.

- 10 years from the last date of service for Medicare/Medicaid records.

Specialized data storage considerations in this industry include encryption requirements in regards to Protected Health Information, audit trail preservation for patient data, emergency access protocols for archived patient data, and a mandatory integration with Electronic Health Record systems.

The most noteworthy key performance indicators in this industry include access audit completeness, data encryption verification, archive validation success rates, and time to retrieve patient records.

Archiving in Financial Services

Financial institutions have their own regulatory frameworks that have to be followed, while maintaining quick access to historical transaction information. The retention patterns for this industry are:

- 5 years after account closure for customer identification data.

- 3-6 years for communication records depending on data type.

- 5-7 years for transaction records, varying by jurisdiction.

- 7 years for all trading records, as mandated by SEC.

The compliance requirements of the financial industry include FINRA requirements for communication activities, compliance with SEC Rule 17a-4 for electronic storage, compliance with MiFID II for European operations, and multiple record-keeping obligations according to the Dodd-Frank Act.

The most noteworthy key performance indicators of the financial industry are data immutability rates, archive search response times, storage cost per Gigabyte, and retrieval accuracy rates.

Archiving in Retail and E-commerce

Retail organizations have a fair share of requirements in their own field, necessitating a balanced approach that can maintain operational efficiency and customer data retention in a single environment. It necessitates the following retention requirements:

- 3 years of storage for inventory and supply chain records.

- Loyalty program data has to be kept for the duration of program participation and for at least two years after that.

- 3-5 years for customer purchase history.

- All the relevant requirements of PCI DSS in regards to payment data.

When it comes to retail and e-commerce, companies would also have to consider product lifecycle tracking, supply chain audit requirements, cross-channel customer data integrations, and seasonal patterns in terms of data access.

The primary performance metrics for archiving in retail include storage cost per customer record, speed of customer profile access, time to retrieve order history, and archive compression ratio.

Archiving in Manufacturing

Industrial and manufacturing businesses have substantial requirements in terms of complex product lifecycle management, including data archival rules and the necessity to maintain the compliant state of these records. Their retention requirements are as follows:

- All equipment maintenance logs have to be stored for the entire lifespan of each equipment element and five years after that.

- Product specifications have similar requirements, necessitating the storage period for the entire lifetime of a product and ten more years after that.

- 5 years of storage for safety incident reports, according to OSHA requirements.

- 7-10 years of retention for quality control records.

The industry-specific needs of manufacturing businesses include supply chain traceability records, CAD/CAM file version management, compliance documentation for ISO 9001, Bill of Materials relationship preservation, and more.

As for the relevant success metrics – they include archive integrity verification rates, cross-reference accuracy percentages, technical document retrieval time, and storage efficiency ratios.

Good understanding of the industry-specific archival patterns can greatly improve archiving strategies, making it possible to deliver optimal value in each sector while also meeting regulatory requirements. Regular updates for these patterns are also recommended to ensure continued alignment with the newest industry requirements and standards.

GRAX Case Studies in Salesforce

Global Computer Tech Company

This Global Computer Tech Company is a large business in the fields of Information Technology, Digital Transformation, and Security Transformation. It needed to manage and reuse a number of mission-critical datasets from instances such as Salesforce Service Cloud, Sales, and third-party data sources in their data lake. The company generates and updates over 18M contacts and 64M cases on a regular basis, with the total data volume in Salesforce of about 2TB. It caused plenty of slowdown issues and time-outs, making it more difficult to access and reuse information when necessary.

The introduction of GRAX made it possible to drastically improve Salesforce performance by automating data archival jobs, allowing the Sales and Support teams access to archived information and the ability to review and analyze the data in question for future sales or customer interactions. The optimized data storage also made it possible to analyze the information itself using AWS Quicksight, Redshift, and Glue in order to provide useful insights on how an Executive Team can improve the company’s results in the future.

Conclusion

Data archiving is the process of moving less critical information to long-term storage, enabling freeing up space and improving system performance. While many people confuse this with backups, archiving has a different and important place in a business data management strategy.

Archiving is especially paramount in the Salesforce environment, the benefits of which apply to organizations of all sizes. If appropriately executed, it turns data archival into an important part of an organization’s infrastructure, combining exceptional performance without losing access to information that might not have many use cases in day-to-day operations.

However, archiving is not a “set it and forget it” kind of activity. It requires regular monitoring and occasional review if it is going to remain effective. As a matter of fact, feedback is an important element in the process of making improvements, hence discovering what may need to be changed in order to keep it effective for future needs within the organization.

An early start for an archiving strategy can facilitate the growth of any Salesforce environment. Knowing when one should act and be prepared in dealing with data storage will save one from getting into a mad scramble to find a solution at that particular point of capacity or performance issues.

Don’t let your data sit in cold storage 🥶

Speak with our product experts to see how archive can play a bigger role in your data management strategy.

[1] Additional storage space can be purchased in blocks of 50 or 500MB at $125/month for 500MB of additional data storage.

Source: https://www.findmycrm.com/blog/crm-overview/how-much-does-salesforce-cost.

[2] Pricing for Hot for first 50 terabyte (TB) in East US Source: https://azure.microsoft.com/en-us/pricing/details/storage/blobs/.

[3] Pricing for S3 Standard for the first 50 terabyte (TB) in US East (Ohio)Source: https://aws.amazon.com/s3/pricing/.

[4] Pricing for Standard Storage in South Carolina (us-east1)Source: https://cloud.google.com/storage/pricing.